Unpacking the Edge Compute Hype: What It Really Is and Why It’s Important

The tech industry has always been a prolific producer of hype, and right now no topic is mentioned more generically than “edge” and “edge compute”. Everywhere you turn these days, vendors are promoting their “edge solution”, with almost no definition, no real-world use cases, no metrics around deployments at scale and no details on how much revenue is being generated. Making matters worse, some industry analysts are publishing reports saying the size of the “edge” market is already in the billions. These reports have no real methodology behind the numbers, don’t define what service they are covering and cite unrealistic growth and use cases. It’s why the moment edge compute use cases are mentioned, people always use the examples of IoT, connected cars and augmented reality.

Part of the confusion in the market is due to rampant “edge-washing”, in which vendors rebrand their existing platforms as edge solutions. Some cloud service providers call their points of presence the edge, and CDN platforms are marketed as edge platforms when, in reality, the traditional CDN use cases are not taking advantage of any edge compute functions. Some mobile communications providers even refer to their cell towers as the edge, and a few cloud-based encoding vendors are now using the word “edge” in their services.

Growing interest among the financial community in anything edge-related is helping fuel this phenomenon, with very little understanding of what it all means, or more importantly, doesn’t mean. Look at the valuation trends for “edge” or “edge computing” vendors and you’ll see there is plenty of incentive for companies to brand themselves as an edge solution provider. This confusion makes it difficult to separate functional fact from marketing fiction. To help dispel the confusion, I’m going to be writing a lot of blog posts this year around edge and edge compute topics with the goal of separating facts from fiction.

The biggest problem is that many vendors are using the phrases “edge” and “edge compute” interchangeably, and they are not the same thing. Put simply, the edge is a location, the place in the network that is closest to where the end user or device is. We all know this term, and Akamai has been using it for a long time to reference a physical location in its network. Edge computing refers to a compute model where application workloads run at an edge location, where logic and intelligence are needed. It’s a distributed approach that shifts the computing closer to the user or device being used. This contrasts with the more common scenario where applications are run in a centralized data center or in the cloud, which is really just a remote data center usually run by a third party. Edge compute is a service; the “edge” isn’t. You can’t buy “edge”; you are buying CDN services that simply leverage an edge-based network architecture that performs work at the distributed points of presence closest to where the digital and physical intersect. This excludes basic caching and forwarding CDN workflows.
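To make the distinction concrete, here is a minimal sketch contrasting a basic cache-and-forward workflow with application logic running at the edge. The function names, the request shape and the A/B routing rule are all hypothetical and do not reflect any vendor’s actual API.

```python
# Hypothetical request/response shapes for illustration only;
# this does not match any vendor's edge or CDN API.
CACHE = {"/video/intro.mp4": b"cached bytes"}


def edge_delivery(path, fetch_from_origin):
    """Basic CDN workflow: serve from cache, otherwise forward to the origin."""
    if path not in CACHE:
        CACHE[path] = fetch_from_origin(path)
    return CACHE[path]


def edge_compute(request):
    """Application logic at the edge: the response is decided at the PoP."""
    # Assumed rule: assign each user to an A/B variant from their user ID.
    variant = "b" if sum(request["user_id"].encode()) % 2 else "a"
    return {"status": 302, "location": f"/landing/{variant}"}
```

In the first function the edge location only stores and forwards bytes. In the second, a decision is made at the edge without a round trip to a central data center.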

When you are deploying an application, the traditional approach would be to host that application on servers in your own data center. More recently, it is likely you would instead choose to host the application in the cloud, with a cloud service provider like Amazon Web Services, Microsoft Azure or the Google Cloud Platform. While cloud service providers do offer regional PoPs, most organizations typically still centralize in a single region or a small number of regions.

But what if your application serves users in New York, Rome, Tokyo, Guangzhou, Rio de Janeiro, and points in between? The end-user journey to your content begins on the network of their ISP or mobile service provider, then continues over the Internet to whichever cloud PoP or data center the application is running on, which may be half a world away. From an architectural viewpoint, you have to think of all of this as your application infrastructure, and many times the application itself is running far, far away from those users. The idea and value of edge computing turns this around. It pushes the application closer to the users, offering the potential to reduce latency and network congestion, and to deliver a better user experience.
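A rough back-of-the-envelope sketch shows why distance matters. It assumes light travels through optical fiber at about 200 km per millisecond, uses approximate city coordinates, and places a hypothetical single-region deployment in northern Virginia. Real round-trip times will be higher because of routing, queuing and processing.

```python
import math

# Assumption: light in optical fiber covers roughly 200 km per millisecond
# (about two thirds of its speed in a vacuum).
FIBER_KM_PER_MS = 200.0

# Approximate city coordinates (latitude, longitude), for illustration only.
CITIES = {
    "New York": (40.71, -74.01),
    "Rome": (41.90, 12.50),
    "Tokyo": (35.68, 139.69),
    "Guangzhou": (23.13, 113.26),
    "Rio de Janeiro": (-22.91, -43.17),
}

# Hypothetical single-region deployment, placed in northern Virginia.
ORIGIN = (38.95, -77.45)


def great_circle_km(a, b):
    """Haversine distance between two (lat, lon) points in kilometers."""
    lat1, lon1, lat2, lon2 = map(math.radians, (*a, *b))
    h = (math.sin((lat2 - lat1) / 2) ** 2
         + math.cos(lat1) * math.cos(lat2) * math.sin((lon2 - lon1) / 2) ** 2)
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def min_round_trip_ms(a, b):
    """Lower bound on round-trip time: straight-line fiber, no routing or queuing."""
    return 2 * great_circle_km(a, b) / FIBER_KM_PER_MS


if __name__ == "__main__":
    for city, location in CITIES.items():
        rtt = min_round_trip_ms(location, ORIGIN)
        print(f"{city}: at least {rtt:.0f} ms round trip")
```

Even under these idealized assumptions, users far from the single region pay a floor of tens of milliseconds on every round trip, while a PoP in or near their own city would bring that floor down to a few milliseconds.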

Computing infrastructure has really evolved over the years. It began with “bare metal,” physical servers running a single application. Then virtualization came into play, using software to emulate multiple virtual machines hosting multiple operating systems and applications on a single physical server. Next came containers, introducing a layer that isolates the application from the operating system, allowing applications to be easily portable across different environments while ensuring uniform operation. All of these computing approaches can be employed in a data center or in the cloud.

In recent years, a new computing alternative has emerged called serverless. This is a zero-management computing environment where an organization can run applications without up-front capital expense and without having to manage the infrastructure. While it is used in the cloud (and could be in a data center, though this would defeat the “zero management” benefit), serverless computing is ideal for running applications at the edge. Of course, where this computing occurs matters when delivering streaming media. Each computing location, on-premises, in the cloud and at the edge, has its pros and cons.

- On-premises computing, such as in an enterprise data center, offers full control over the infrastructure, including the storage and security of data. But it requires substantial capital expense and costly management. It also means you may need reserve server capacity to handle spikes in demand, capacity that sits idle most of the time, which is an inefficient use of resources. And an on-premises infrastructure will struggle to deliver low-latency access to users who may be halfway around the world.

- Centralized cloud-based computing eliminates the capital expense and reduces the management overhead, because there are no physical servers to maintain. Plus, it offers the flexibility to scale capacity quickly and efficiently to meet changing workload demands. However, since most organizations centralize their cloud deployments to a single region, this can limit performance and create latency issues.

- Edge computing offers all the advantages of cloud-based computing plus additional benefits. Application logic executes closer to the end user or device via a globally distributed infrastructure. This dramatically reduces latency and avoids network congestion, with the goal of providing an enhanced and consistent experience for all users.

There is a trade-off with edge computing, however. The distributed nature of the edge translates into a lower concentration of computing capacity in any one location. This presents limitations for what types of workloads can run effectively at the edge. You’re not going to be running your enterprise ERP or CRM application in a cell tower, since there is no business or performance benefit. And this leads to the biggest unknown in the market today, that being, which application use cases will best leverage edge compute resources? As an industry, we’re still finding that out.

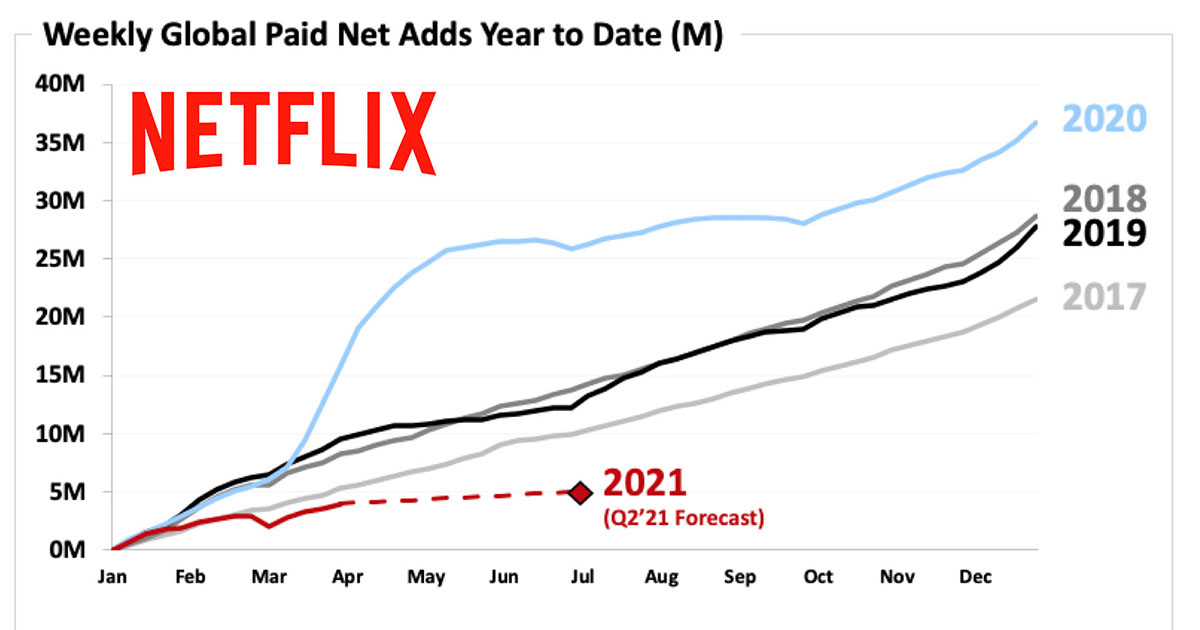

From a customer use case and deployment standpoint, the edge computing market is so small today that both Akamai and Fastly have told Wall Street that their edge compute services won’t generate significant revenue in the near term. With regard to its edge compute services, Fastly’s CFO said during the company’s Q1 earnings call, “2021 is much more just learning what are the use cases, what are the verticals that we can use to land as we lean into 2022 and beyond.” Akamai, which gave out a lot of CAGR numbers at its investor day in March, said it is targeting revenue from “edge applications” to grow 30% in 3-5 years, inside its larger “edge” business, with expected overall growth of 2-5% in 3-5 years.

Analysts that are calling CDN vendors “edge computing-based CDNs” don’t understand that most CDN services being offered are not leveraging any “edge compute” services inside the network. You don’t need “edge compute” to deliver video streaming, which, as an example, made up 57% of all the bits Akamai delivered in 2020 for its CDN services, or what it calls edge delivery. Akamai accurately defines the video streaming it delivers as “edge delivery”, not “edge compute”. Yet some analysts are taking the proper terminology vendors are using and swapping it out for their own incorrect terms, which only adds to the confusion in the market.

In simple terms, edge compute is all about moving logic and intelligence to the edge. Not all services or content need to have an edge compute component, or be stored at or delivered from the edge, so we’ll have to wait to see which applications customers use it for. The goal with edge compute isn’t just about improving the user experience but also having a way to measure the impact on the business, with defined KPIs. This isn’t well defined today, but it’s coming over the next few years as we see more use cases and adoption.
