The Seriousness of Device Fragmentation and How It Impacts Streaming Services

Device fragmentation has become an escalating challenge for streaming services, as launching, managing, and ensuring quality of experience (QoE) for viewers across a growing number of devices eventually becomes overwhelming. From multiple operating systems and platforms to constant software updates, offering a consistent experience on all devices places a heavy burden on development teams and requires a broad yet specialized skill set.

It also affects every company’s bottom line, as supporting more devices expands the addressable viewer base, especially for free ad-supported streaming TV (FAST) services, where it increases the number of possible ad views. Audience reach is generally the first thing analyzed when evaluating devices, but technical challenges also need to be considered, particularly the challenge of testing playback on each and every device viewers use.

With more video options than ever, viewers are demanding the highest QoE, particularly continuity in playback when moving from device to device. Streaming services tell me that putting the systems in place to test, deliver, and maintain that level of service can become a very costly, time-consuming, and formidable challenge.

The number of devices that streaming services have to support is large, especially when you account for both new models and older hardware still in use. Competition between device manufacturers is fueling this increase, as they need to consistently upgrade or introduce products for users to adopt, which can result in a new or updated OS, new Wi-Fi technology, and even new codebases that need to be supported. With many streaming services now offered globally, and no industry standardization across devices like we see with set-top boxes (STBs), the difficult decisions and the work to support existing devices and/or add new ones fall on the streaming services themselves.

A good example of the difficulties streaming services face when adding new devices is that each manufacturer is pushing its own operating system. This can be seen in smart TVs, with Samsung Tizen OS, LG webOS, and Vizio SmartCast. This is good for device manufacturers when it comes to how they position themselves with consumers, but for streaming services, it means they need to account for the differences when trying to offer a consistent video experience across platforms. Having their own OS has become a source of revenue for manufacturers (see Vizio’s Q2 earnings), meaning this trend will continue, and new and existing vendors will look to develop their own. From browsers, phones, and TVs to USB devices and gaming consoles, there seems to be no end to device growth, which has become the norm.

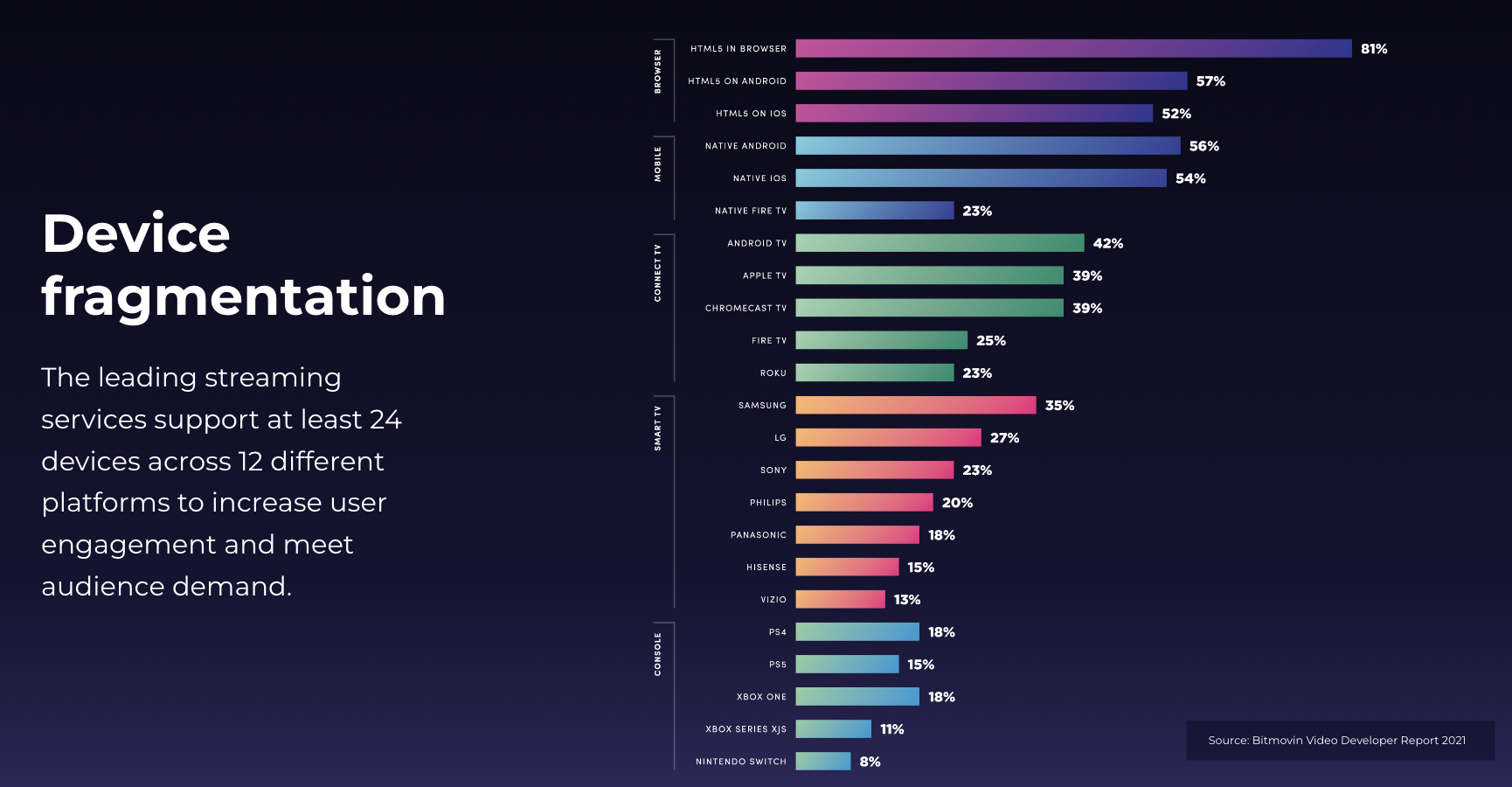

Streaming device fragmentation can also be seen in Bitmovin’s video developer report from 2021, which surveys developers across the industry. It shows that leading streaming services now support at least 24 devices across 12 different platforms and are looking to support additional ones.

Once streaming services and their development teams decide which devices they want to support playback on, they need to define their player testing processes, using either manual or automated testing. As with most things, there are pros and cons to both approaches. Either way, finding and maintaining the supported devices is costly. Manual testing adds to that cost because it requires the in-house or external dev team to build a testing regimen that they must physically run and observe with every release. Automated testing is also expensive up front, as it is either built from scratch in-house or based on a bare-bones SaaS solution that dev teams have to implement their use cases into. In the long term, though, automated testing tends to become more cost-effective and stable, as video services no longer need teams constantly dedicated to manual work.

The timeline to implement a testing structure matters, as several factors go into it, such as the procurement and maintenance of devices and the development of player use cases, which can differ depending on the device model. For example, LG TVs from 2016 can’t be tested against all of the same use cases as later models. One such use case is a period switch during playback, where the encrypted video is changed to an unencrypted client-side ad and then back to the encrypted stream. Use cases are also not one-size-fits-all, as they depend on the experience a streaming service is trying to provide, whether that means testing video qualities or ad transitions. As for device procurement, viewers don’t replace devices often, and a smart TV can stay in use for over five years, so services have to obtain older models as well. That is another process that can limit a team’s ability to guarantee consistent playback quality from device to device.
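
One way to picture how use cases vary by device model is as a device-by-use-case test matrix. The Python sketch below is only an illustration under stated assumptions: the `Device` class, the use-case names, and the `build_test_plan` helper are all hypothetical, and the only entry taken from the discussion above is that a 2016 LG TV is skipped for the ad period switch. The 2020 model years and the Samsung entry are placeholders, not measured compatibility data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Device:
    name: str
    model_year: int
    # Use cases this model cannot be tested against (illustrative values only).
    unsupported_use_cases: frozenset = frozenset()


# Hypothetical use-case names for the kinds of tests mentioned in the article.
USE_CASES = ["basic_playback", "quality_switch", "ad_period_switch"]

# Placeholder device list; only the 2016 LG limitation comes from the article.
DEVICES = [
    Device("LG webOS TV", 2016, frozenset({"ad_period_switch"})),
    Device("LG webOS TV", 2020),
    Device("Samsung Tizen TV", 2020),
]


def build_test_plan(devices, use_cases):
    """Return (device label, use case) pairs, skipping unsupported combinations."""
    plan = []
    for device in devices:
        label = f"{device.name} {device.model_year}"
        for use_case in use_cases:
            if use_case in device.unsupported_use_cases:
                continue  # this model cannot run this use case
            plan.append((label, use_case))
    return plan


if __name__ == "__main__":
    for label, use_case in build_test_plan(DEVICES, USE_CASES):
        print(f"{label}: {use_case}")
```

Even in this toy form, each added device or use case grows the plan multiplicatively, which is part of why procurement and maintenance weigh so heavily on the timeline.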

Every streaming service is already making choices about which platforms and device versions to officially support. Unless someone discovers an endless supply of video-centric developers (wishful thinking for many out there), it could become too costly and unmanageable for some streaming services to maintain the same QoE on every platform, and they’ll need to seek other solutions that offer effective ways to combat this issue. This is the very reason Bitmovin recently released Stream Lab, a cloud-based platform that enables development teams to easily test their streams in real environments on physical devices and receive transparent reporting with clear performance feedback. With the complexity of testing across so many devices and platforms, I suspect we will continue to see more third-party solutions that streaming services can rely on.