Netflix Removes Support for Casting From Most Devices

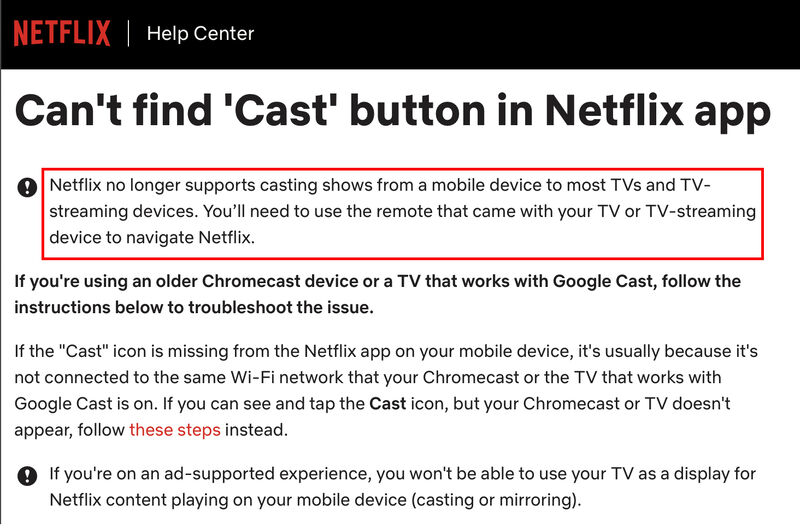

Netflix just made its service less user-friendly for travelers. The company announced that it no longer supports casting shows from mobile devices to most TVs and streaming devices. Casting will now work only on older Chromecast models that don't come with a remote and on TVs with built-in Google Cast, regardless of whether you're on an ad-supported or ad-free Netflix plan.

This is a bad decision, and it will hurt people who stream Netflix in hotel rooms, where casting is often the only option because the hotel doesn't let you plug a device into the TV's HDMI port. Even when you can reach the HDMI ports on a hotel TV, the supplied remote is often locked down and won't let you switch inputs. For this reason, I always throw Samsung, LG, and Vizio remotes in my bag. The change will also affect people who cast to projectors that have no native Netflix app.

Netflix hasn't said why it is dropping support for casting, but it is an odd move for a company that says it always thinks about the service from the user's standpoint. I can only guess that the motive is to make account sharing harder, since casting from a mobile device is a loophole around Netflix's account-sharing restrictions.