Clearing Up The Cloud Confusion re: Amazon, Disney, Hulu, BAMTech, Akamai and Netflix

Over the past few days there has been a lot of infrastructure news surrounding how video is delivered from third-party content delivery networks. Between the news around Disney’s upcoming OTT services, Hulu using Amazon for their live streaming service, and BAMTech now being 75% owned by Disney, some in the media are making inaccurate statements.

Let’s start with the press release that Hulu is using Amazon’s CDN, CloudFront, to help deliver some of the streams for Hulu’s new live service. This isn’t really “new” news, as Hulu confirmed for me in May that they were using Amazon, along with Akamai, for their live streams, and other CDNs as well for their VOD content. For live stream ingestion, Hulu is taking all of the live signals via third-party vendors including BAMTech and iStreamPlanet. What the Hulu and Amazon tie-up does show us is just how commoditized the service of delivering video over the Internet really is. Nearly every live linear OTT service is using a multi-CDN approach, even for their premium service. Case in point, AT&T is using Level 3, Limelight and Akamai for their DirecTV Now live service, and this is the norm, not the exception. There was also a blog post saying it’s important for Disney and BAMTech to “own not just content assets, but also delivery infrastructure.” But BAMTech doesn’t own any delivery infrastructure; they use third-party CDNs.

In the news roundup of Amazon and Hulu, multiple blogs are also implying that Netflix uses Amazon to deliver Netflix videos. Statements like “Netflix depends on Amazon to deliver its ever-growing library of shows and movies to customers” are not accurate. Yes, Netflix relies heavily on Amazon’s cloud services, but not for video delivery. Netflix delivers all of their videos from their own content distribution network and doesn’t use Amazon’s CloudFront CDN for video delivery at all.

When it comes to Disney’s new ESPN OTT service, due out in 2018, and their Disney-branded movie/content service due out in 2019, some have said third-party content delivery networks, and in particular Akamai, will see a “boost” or “great benefit” from these new services. But the reality is, they won’t. And not just Akamai, but any of the third-party CDNs that BAMTech uses, of which they use many. If you just run the numbers, you can see what a contract from Disney would be worth to any third-party CDN, specific to the bits consumed. If ESPN had 3M subs from day one, which they won’t, and each user watched 5 hours a day, with 50% of their viewing on mobile and 50% on a large screen, each user would consume about 130GB of data per month. At a price of about $0.004 per GB delivered, each viewer would be worth about $0.52 per month to a CDN.

And with BAMTech using multiple CDNs for their live streaming, if three CDNs each got 1/3 of the traffic, each CDN’s share would be worth just over $500k per month. But ESPN won’t have 3M subs from day one, so the value would be even lower. For a company like Akamai that had $276M in media revenue for Q2, an extra $1M or less in revenue per quarter isn’t a “boost” at all. So for some to write posts saying ESPN’s new OTT service could be a “large business opportunity for the company [Akamai],” it’s simply not true. Reporters should stop using words like “large” and “big” when discussing opportunities in the market if they aren’t willing to define them with metrics and actual numbers.
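For anyone who wants to rerun the math with their own assumptions, here is a rough sketch of the calculation above in Python. The inputs are the figures quoted in this post (3M subs, about 130GB per user per month, about $0.004 per GB, three CDNs splitting traffic evenly); the function and variable names are made up for illustration, and real CDN contracts involve commits and tiered pricing that this ignores.

```python
# Back-of-the-envelope estimate of what an OTT service is worth to each CDN.
# All inputs are the rough figures quoted in the post, not contract data.

SUBSCRIBERS = 3_000_000        # hypothetical day-one subscriber count
GB_PER_SUB_PER_MONTH = 130     # estimated monthly consumption per user
PRICE_PER_GB = 0.004           # approximate price per GB delivered, in USD
CDN_COUNT = 3                  # assumes traffic is split evenly across CDNs
AKAMAI_Q2_MEDIA_REVENUE = 276_000_000  # Akamai media revenue for Q2, in USD


def monthly_value_per_viewer(gb_per_month, price_per_gb):
    """Delivery revenue one viewer generates per month, across all CDNs."""
    return gb_per_month * price_per_gb


def monthly_value_per_cdn(subscribers, gb_per_month, price_per_gb, cdn_count):
    """Monthly delivery revenue for one CDN, given an even traffic split."""
    total = subscribers * monthly_value_per_viewer(gb_per_month, price_per_gb)
    return total / cdn_count


per_viewer = monthly_value_per_viewer(GB_PER_SUB_PER_MONTH, PRICE_PER_GB)
per_cdn_month = monthly_value_per_cdn(
    SUBSCRIBERS, GB_PER_SUB_PER_MONTH, PRICE_PER_GB, CDN_COUNT
)
per_cdn_quarter = per_cdn_month * 3
share_of_media_revenue = per_cdn_quarter / AKAMAI_Q2_MEDIA_REVENUE

print(f"Per viewer per month:  ${per_viewer:.2f}")
print(f"Per CDN per month:     ${per_cdn_month:,.0f}")
print(f"Per CDN per quarter:   ${per_cdn_quarter:,.0f}")
print(f"Share of $276M media revenue: {share_of_media_revenue:.2%}")
```

Even with the full 3M subs, which is the ceiling in this scenario, the quarterly figure is well under 1% of Akamai’s Q2 media revenue. Lower `SUBSCRIBERS` to something more realistic and the number shrinks from there.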

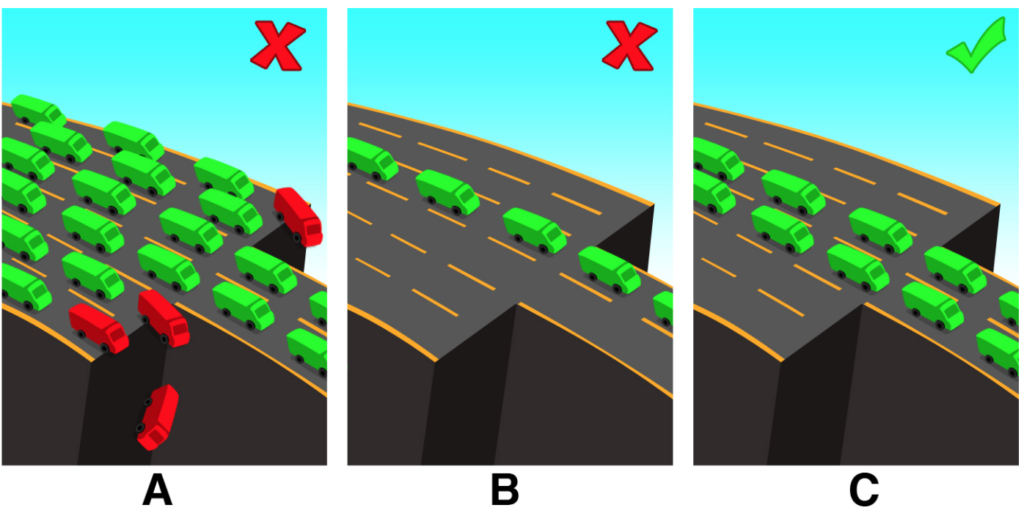

Of course, it’s impossible to have perfect knowledge of the networks ahead of time, but it is possible to get a much better estimate based on prior knowledge of network conditions. If estimates are close to correct, the TCP slow start problem can be enormously improved upon. The contention that PacketZoom makes is not that TCP has never improved in any use case. Traditionally, TCP’s initial congestion window was set to 3 MSS (maximum segment size, which is derived from the MTU of the path between two endpoints). As networks improved, this was set to 10 MSS; then Google’s Quick UDP Internet Connections (QUIC) protocol, in use in the Chrome browser, raised it to 32 MSS.
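To get a feel for why the starting value matters, here is a simplified sketch in Python that counts how many round trips an idealized slow start needs to deliver a given number of segments, for the three starting values mentioned above (3, 10 and 32 MSS). It assumes the window doubles every round trip with no packet loss, no receiver window limit and no delayed ACKs, so it is an illustration, not a model of any real TCP stack or of PacketZoom’s protocol. The function name and the 100-segment transfer size are assumptions picked for the example.

```python
def round_trips_to_deliver(total_segments, initial_window):
    """Round trips needed to send total_segments under idealized slow start.

    Assumes the congestion window (in segments) doubles every round trip
    and that nothing is ever lost, which is a deliberate simplification.
    """
    window = initial_window
    sent = 0
    round_trips = 0
    while sent < total_segments:
        sent += window      # send a full window this round trip
        window *= 2         # slow start: window doubles per round trip
        round_trips += 1
    return round_trips


# Hypothetical transfer of 100 segments (roughly 146 KB at 1,460 bytes each).
TRANSFER_SEGMENTS = 100

for initial_window in (3, 10, 32):
    rtts = round_trips_to_deliver(TRANSFER_SEGMENTS, initial_window)
    print(f"initial window {initial_window:>2} MSS -> {rtts} round trips")
```

On a mobile network where each round trip can take a couple of hundred milliseconds, every round trip saved at the start is time the user is not waiting, which is why a better initial estimate pays off most on short transfers.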

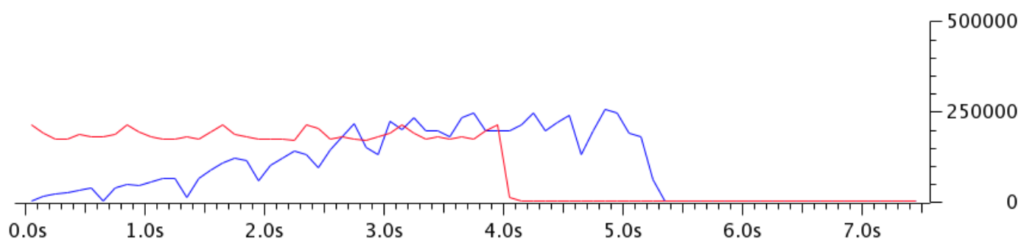

TCP started with a very low value and took 3 seconds to fully utilize the bandwidth. In the controlled experiment shown by the red line, full bandwidth was in use nearly from the start. The blue line also shows some pretty aggressive backoff that’s typical of TCP. In cricket, they often say that you need a good start to pace your innings well, and to strike on the loose deliveries to win matches. PacketZoom says that in their case, the initial knowledge of bottlenecks gets the good start. And beyond the start, there’s even more that they can do to “pace” the traffic and look for “loose deliveries.” The payoff to these changes would be a truly big win: a huge increase in the efficiency, and speed, of mobile data transfer.