Inside Apple’s Live Event Stream Failure, And Why It Happened: It Wasn’t A Capacity Issue

Apple’s live stream of the unveiling of the iPhone 6 and Watch was a disaster today right from the start, with many users, myself included, having problems trying to watch the event. While at first I assumed it must be a capacity issue pertaining to Akamai, a deeper look at the code on Apple’s page and some other elements from the event shows that decisions Apple made about its website, and problems with how it set up storage on Amazon’s S3 service, caused the biggest problems with the event.

Unlike the last live stream Apple did, this time around Apple decided to add some JavaScript and JSON (JavaScript Object Notation) code to the apple.com page, which added an interactive element on the bottom showing tweets about the event. As a result, the page was making refresh calls every few milliseconds. Apple’s decision to add the JSON-related JavaScript code made the apple.com page uncacheable. Apple usually has Akamai cache the page for its live events, but this time around there would have been no way for Akamai to do that, which has a huge impact on performance when it comes to loading the page and the stream. And since Apple embeds its video directly in the web page, any performance problems in the page also impact the video. Akamai didn’t return my call asking for more details, but looking at the code shows there was no way Akamai could have cached it. This is also one of the reasons why, when I tried to load the Apple live event page on my iPad, it would make Safari quit. That’s a problem with the code on the page, not with the video.
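To put rough numbers on why cacheability matters here, below is a minimal back-of-the-envelope sketch. Everything in it is an assumption for illustration: the viewer count, the polling interval, the number of edge nodes and the TTL are hypothetical values, not figures from Apple or Akamai, and `origin_rate` is a name I made up. The only point is that with no caching every poll reaches the origin, while even a short TTL caps origin traffic at roughly one refresh per edge node per TTL.

```python
def client_rate(viewers: int, poll_interval_s: float) -> float:
    """Total requests per second when every viewer polls once per interval."""
    return viewers / poll_interval_s


def origin_rate(viewers: int, poll_interval_s: float,
                edge_nodes: int, ttl_s: float) -> float:
    """Approximate requests per second that reach the origin.

    Simplifying assumption: each edge node refetches a cached object at
    most once per TTL. A TTL of zero models an uncacheable response, so
    every client request is passed through to the origin.
    """
    total = client_rate(viewers, poll_interval_s)
    if ttl_s <= 0:
        return total
    return min(total, edge_nodes / ttl_s)


if __name__ == "__main__":
    # Hypothetical numbers, chosen only to show the shape of the problem.
    viewers, interval, edges = 1_000_000, 0.5, 1_000
    print("uncacheable:", origin_rate(viewers, interval, edges, ttl_s=0))
    print("5 second TTL:", origin_rate(viewers, interval, edges, ttl_s=5))
```

Under these made-up inputs the uncacheable case sends every poll to the origin, while the cached case sends only a small, fixed trickle regardless of audience size.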

Because of all the refresh calls from the JSON-related JavaScript code, it looks like the player was artificially forced to degrade the quality of the video, dropping it down to a lower bitrate, because it thought there were more requests for the stream than there actually were. As for the foreign language translation that we heard for the first 27 minutes of the event, that’s all on Apple, as they do the encoding for their events themselves, on location. Clearly someone on Apple’s side didn’t have the encoder set up right, and their primary and backup streams were also way out of sync. Whatever Apple sent to Akamai’s CDN is what got delivered, and in this case the video was overlaid with a foreign language track. I also saw at least one instance where I believe Apple’s encoder(s) were rebooted after the event had already started, which probably also contributed to the “could not load movie” and “you don’t have permission to access” error messages.

Looking at the metadata from the event page, you could see that Apple was hosting content for the interactive element on the apple.com event page on Amazon’s S3 cloud storage service. From what I can tell, it looks like Apple set up the content in a single S3 bucket with a poor bucket configuration and little to no cache hit ratio. Amazon didn’t reply to my request for more info, but it’s clear that Apple didn’t set up their S3 storage correctly, which caused huge performance issues when all the requests hit Amazon’s network in a single location.

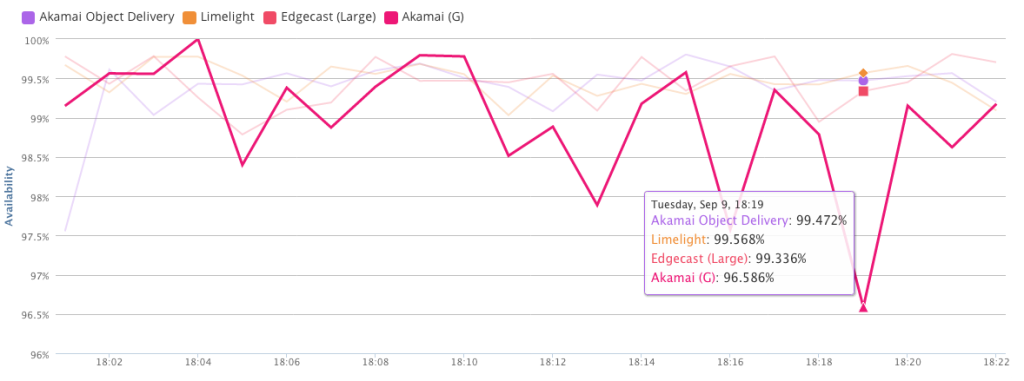

As for Akamai’s involvement in the event, they were the only CDN Apple used. Traceroutes from all over the planet (thanks to all who sent them in to me) showed that Apple relied solely on Akamai for the delivery. Without Akamai being able to cache Apple’s webpage, the performance of the video took a huge hit. If Akamai can’t cache the website at the edge, then all requests have to go back to a central location, which defeats the whole purpose of using Akamai or any other CDN to begin with. Every CDN’s architecture is based on being able to cache content, which in this case Akamai clearly was not able to do. The chart from third-party web performance provider Cedexis shows Akamai’s availability dropping to 98.5% in Eastern Europe during the event, which isn’t surprising if no caching is being used.
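The edge caching argument above can be illustrated with a toy simulation. This is only a sketch under stated assumptions: `EdgeCache` and `simulate` are hypothetical names, the model is a single edge node with a simple TTL, and it says nothing about how Akamai’s platform is actually implemented. It simply counts how many requests are answered at the edge versus sent back to the origin.

```python
from dataclasses import dataclass, field


@dataclass
class EdgeCache:
    """Toy model of one CDN edge node using a fixed TTL (hypothetical)."""
    ttl_s: float
    expiry: dict = field(default_factory=dict)  # url -> time the copy expires
    hits: int = 0
    misses: int = 0

    def get(self, url: str, now: float) -> str:
        """Return where the response came from: 'edge' or 'origin'."""
        expires_at = self.expiry.get(url)
        if expires_at is not None and now < expires_at:
            self.hits += 1
            return "edge"
        self.misses += 1
        if self.ttl_s > 0:  # a TTL of zero models an uncacheable page
            self.expiry[url] = now + self.ttl_s
        return "origin"

    def hit_ratio(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0


def simulate(ttl_s: float, requests: int = 1000,
             spacing_s: float = 0.01) -> EdgeCache:
    """Send evenly spaced requests for one URL through a single edge node."""
    cache = EdgeCache(ttl_s=ttl_s)
    for i in range(requests):
        cache.get("/live/", now=i * spacing_s)
    return cache


if __name__ == "__main__":
    for ttl in (0, 10):
        result = simulate(ttl)
        print(f"ttl={ttl}s origin_requests={result.misses} "
              f"hit_ratio={result.hit_ratio():.3f}")
```

With a TTL of zero every request in the run goes back to the origin, which is the situation described above. With a 10 second TTL, only the first request does.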

The bottom line with this event is that the encoding, the translation, the JavaScript code, the video player, the calls to a single S3 storage location and the millisecond refreshes didn’t work properly together, and that combination was the root cause of Apple’s failed attempt to make the live stream work without any problems. So while it would be easy to say it was a CDN capacity issue, which was my initial thought considering how many events are taking place today and this week, it does not appear that a lack of capacity played any part in the event not working properly. Apple simply didn’t provision and plan for the event properly.

Updated Thursday Sept. 9th: From talking to transit providers and looking at DeepField data, Apple’s live video stream did 6-8Tbps at peak. World Cup peak on Akamai was 6.8Tbps. So the idea that this was a capacity issue isn’t accurate, and the event didn’t generate the kind of numbers I see people quoting, like “hundreds of millions” watching the stream.

Updated Thursday Sept. 9th: While some in the comments section want to argue with me that problems with the Apple.com webpage didn’t impact the video, here is another post from someone who explains, in much better detail than I can, many of the problems Apple had with their website that contributed to the live stream issues. See: Learning from Apple’s livestream perf fiasco