Updated: Companies Should Reject Licensing Terms From HEVC Advance Patent Pool

In March, a new group named HEVC Advance announced the formation of a new patent pool [see: New HEVC Patent Pool Launches Creating Confusion & Uncertainty In The Market] with the goal of compiling over 500 patents pertaining to HEVC technology. The pool of patent holders, which is “expected” to include GE, Technicolor, Dolby, Philips, and Mitsubishi Electric, has just announced its royalty rates and is going directly after content owners and CE manufacturers. HEVC Advance wants 0.5% of content owners’ attributable gross revenue for each HEVC Video type. To put in perspective how unjust and unfair their licensing terms are, they want 0.5% of the revenue of Netflix, Apple, Facebook, Amazon and every other content owner/distributor, as it pertains to HEVC usage. Considering that most content owners and distributors plan to convert all of their videos over time to the new High Efficiency Video Coding compression standard, companies like Facebook, Netflix and others would have to pay over $100M a year in licensing payments. The licensing terms apply to all content services that get revenue from advertising, subscription and PPV, which covers pretty much every content owner, OTT provider, broadcaster, sports league, satellite broadcaster and cable provider you can think of.
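
To make the percentage concrete, here is a minimal Python sketch of the content royalty as described above: 0.5% of the gross revenue attributed to HEVC video. The function names and the `hevc_share` input are hypothetical, and how revenue actually gets "attributed" to HEVC is an assumption, since the announced terms don't spell it out. It simply shows what level of HEVC-attributed revenue the $100M-a-year figure implies.

```python
# Minimal sketch of the 0.5% content royalty described in the post.
# Function names and inputs are hypothetical illustrations, not HEVC Advance's
# actual methodology, which has not been published at this level of detail.

ROYALTY_RATE = 0.005  # 0.5% of attributable gross revenue


def hevc_content_royalty(gross_revenue: float, hevc_share: float) -> float:
    """Annual royalty on the part of gross revenue attributed to HEVC video.

    gross_revenue: annual gross revenue in dollars.
    hevc_share: assumed fraction (0.0 to 1.0) of that revenue attributed to
        HEVC-encoded content; how to measure this is left open by the terms.
    """
    if not 0.0 <= hevc_share <= 1.0:
        raise ValueError("hevc_share must be between 0.0 and 1.0")
    return gross_revenue * hevc_share * ROYALTY_RATE


def revenue_implied_by_royalty(royalty: float) -> float:
    """HEVC-attributed revenue at which the annual royalty reaches `royalty`."""
    return royalty / ROYALTY_RATE


if __name__ == "__main__":
    # What HEVC-attributed revenue corresponds to $100M a year in royalties?
    print(f"${revenue_implied_by_royalty(100e6):,.0f}")
    # Hypothetical service: $10B gross revenue, 30% of it attributed to HEVC.
    print(f"${hevc_content_royalty(10e9, 0.30):,.0f}")
```

At 0.5%, a $100M annual payment corresponds to roughly $20B of HEVC-attributed revenue, and the same function applied to a $100B market gives $500M a year.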

Making matters worse, HEVC Advance says their licensing terms [listed in detail here] are “retroactive to date of 1st sale”, so companies would be required to make payments on content they have already distributed using HEVC. Beyond content owners, HEVC Advance is also going after CE manufacturers of TV, mobile and streaming devices. TV manufacturers would have to pay $1.50 per unit, and mobile devices would cost $0.80 per unit. Streaming boxes, cable set-top boxes, game consoles, Blu-ray players, digital video recorders, digital video projectors, digital media storage devices, personal navigation devices and digital photo frames would cost a manufacturer $1.10 per unit.

While HEVC Advance is quick to say how “fair and reasonable” their terms are, they aren’t. The best way to describe their terms is unreasonable and greedy. MPEG LA, another licensing body for HEVC patents, charges CE manufacturers $0.20 per unit after the first 100,000 units each year (no royalties are payable for the first 100,000 units), up to a current maximum annual amount of $25M. HEVC Advance’s rate for TV manufacturers ($1.50 per unit) is more than seven times MPEG LA’s ($0.20 per unit). In addition, MPEG LA charges content owners nothing for using HEVC technologies in their business. (Updated 7/24: Removed reference to the licensing terms for AVC to make it easier to compare pricing)
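
One way to see how far apart the two pools are is to compute a TV manufacturer's annual bill under each set of terms as summarized above. The sketch below is a simplification under stated assumptions: it models only MPEG LA's free first 100,000 units, $0.20 per unit and $25M annual cap, and only HEVC Advance's $1.50 per TV with no free tier and no cap (the post notes the proposed royalties are uncapped). It works in cents so the arithmetic stays exact; the constant and function names are made up for illustration.

```python
# Simplified comparison of annual TV royalties under the two pools' terms as
# summarized in the post. All amounts are integer US cents to keep math exact.
# Hypothetical names; real license agreements contain more conditions.

# MPEG LA (as described): first 100,000 units/year free, $0.20/unit after,
# capped at $25M per year.
MPEG_LA_FREE_UNITS = 100_000
MPEG_LA_CENTS_PER_UNIT = 20
MPEG_LA_ANNUAL_CAP_CENTS = 25_000_000 * 100

# HEVC Advance (as described): $1.50 per TV, no free tier, no cap.
HEVC_ADVANCE_TV_CENTS_PER_UNIT = 150


def mpeg_la_annual_fee_cents(units: int) -> int:
    """Annual fee after the free tier, limited by the annual cap."""
    billable = max(0, units - MPEG_LA_FREE_UNITS)
    return min(billable * MPEG_LA_CENTS_PER_UNIT, MPEG_LA_ANNUAL_CAP_CENTS)


def hevc_advance_tv_fee_cents(units: int) -> int:
    """Annual fee with a flat per-unit rate and no cap."""
    return units * HEVC_ADVANCE_TV_CENTS_PER_UNIT


if __name__ == "__main__":
    for units in (50_000, 10_000_000, 200_000_000):
        mla = mpeg_la_annual_fee_cents(units)
        hevca = hevc_advance_tv_fee_cents(units)
        print(f"{units:>11,} TVs: MPEG LA ${mla / 100:,.2f}"
              f" | HEVC Advance ${hevca / 100:,.2f}")
```

Under these assumptions, 10 million TVs a year cost about $1.98M under MPEG LA versus $15M under HEVC Advance. Past roughly 125 million units the MPEG LA cap takes over, while the uncapped HEVC Advance bill keeps growing.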

Licensing groups typically don’t go after content owners; instead they go after hardware and platform vendors in the market who are customers of content distributors. But in this case, HEVC Advance is going directly after content owners and isn’t asking CDNs, encoding vendors or others in the video ecosystem to license their patents. What HEVC Advance doesn’t grasp is that this approach of trying to get a share of content owners’ revenues has been tried in the past and failed miserably. MPEG-4 Part 2, the original MPEG-4 video compression standard that pre-dated AVC, failed in the market because of this licensing approach. Content owners are not willing to share their revenue, and that is a fatal flaw in HEVC Advance’s approach. The fact that they think companies like Facebook, Apple or Netflix are going to hand over tens if not hundreds of millions of dollars to them each year shows just how delusional they really are. While I don’t expect HEVC Advance to get any traction with content owners, their licensing terms could still have a major impact on the industry. Right now, content licensing deals for streaming media services do not account for the cost of royalty payments. So if more money is required to play back higher-quality video, content licensing costs will go up, and consumers are going to foot the bill in the form of higher-priced streaming services.

Another big problem is that there are no caps on the proposed royalties. This creates an immense burden for Internet-based technologies and software applications that may be looking to incorporate HEVC, since in most cases there is no realistic way of predicting what percentage of your content will be consumed using HEVC each month. Second, the 0.5% royalty on all revenues attributable to HEVC-based content is, to put it mildly, a loose cannon. This umbrella is intended to cover both direct revenue, such as from subscription and PPV services, and indirect revenue, such as from advertising-supported business models. One could argue that the definition could be stretched to cover the purchase price of merchandise advertised using HEVC-compressed video: if Zappos has a product video of a shoe on its website encoded using HEVC, Zappos would have to give a percentage of the sale of that shoe to HEVC Advance. Furthermore, this is 0.5% of gross revenue, not net profit, so it is effectively a “compression tax” taken off the top, before the content licensing fees and content delivery expenses that any content service provider has to cover in order to be profitable. Recall again that there is no cap on fees, and you can see how this one is almost inevitably headed to the courts.
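
Because the royalty is taken from gross revenue rather than profit, its real weight depends on a service's margins. The minimal sketch below expresses a 0.5% gross-revenue royalty as a share of operating profit; the margins in it are hypothetical values chosen only for illustration, not figures for any company. Note that at an operating margin below 0.5%, the royalty would exceed the profit entirely.

```python
# Sketch: a royalty levied on gross revenue, expressed as a share of profit.
# The margins below are hypothetical examples, not figures for any company.

ROYALTY_RATE = 0.005  # 0.5% of HEVC-attributable gross revenue


def royalty_share_of_profit(operating_margin: float, hevc_share: float = 1.0) -> float:
    """Fraction of operating profit consumed by the royalty.

    operating_margin: operating profit divided by gross revenue (must be > 0).
    hevc_share: assumed fraction of revenue attributed to HEVC content.
    Revenue cancels out: (rate * share * R) / (margin * R) = rate * share / margin.
    """
    if operating_margin <= 0:
        raise ValueError("operating_margin must be positive")
    return ROYALTY_RATE * hevc_share / operating_margin


if __name__ == "__main__":
    for margin in (0.20, 0.10, 0.05, 0.02):
        share = royalty_share_of_profit(margin)
        print(f"{margin:.0%} margin -> royalty takes {share:.1%} of operating profit")
```

With no cap, this ratio holds at any revenue level, so the thinner a service's margins, the larger the bite.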

Currently, these terms only cover B2C applications such as social media, streaming video and Pay TV; licensing terms for B2B use cases such as video conferencing, video surveillance and enterprise video webcasting are still being considered by HEVC Advance. It is conceivable that those use cases will not be licensed on the same onerous terms as the content-based fee, but the burden created by the current B2C licensing terms is almost certain to increase enterprises’ perceived risk of adopting HEVC anyway. This is a shame, because HEVC has the potential to transform the video delivery infrastructure and was finally approaching an inflection point where shipments and usage were projected to rise to meaningful levels. 4K content is already reeling under the pressure of unviable economics. Between limited improvement in visual quality and storage and transmission costs that rise much faster than monetization can, the financial argument for 4K was already weak for the vast majority of use cases. The curveball thrown by HEVC Advance further undermines this already risky value proposition.

Adding insult to injury, HEVC Advance has yet to provide any details on which patents they expect to be in the pool. The company has said they will have more details to share later in the year, yet they acknowledge that the patents have not yet gone through an independent patent evaluation process, which they expect to start next month. HEVC Advance’s CEO also mentioned that some of the patent owners “expected” to be in the pool are still finalizing their paperwork, so there is no confirmation yet of exactly which companies are officially in the pool. HEVC Advance has not yet identified any patents as essential to HEVC, even though they say many of the patents are essential. In other words, there is still room for patent holders to take the responsible road and monetize their intellectual property in a fairer, more scalable and more industry-friendly manner, either on their own or in pools other than HEVC Advance.

While some content owners have told me they feel these rates don’t apply to them since they use cloud providers to encode and deliver their content, that’s not a valid argument. Their contracts with those vendors don’t protect them, because the vendors are not required to license the technology. If content owners band together and agree not to license from HEVC Advance, which is what I suggest they do, HEVC Advance will fail in the market and be forced to change strategy or change their terms to be fair and reasonable. Since HEVC Advance is simply a licensing body, they can’t sue anyone. The actual patent holders would have to legally go after thousands of content owners and CE manufacturers, and I don’t see companies like Technicolor, Dolby, Philips and Mitsubishi Electric having the time or energy to do that. But make no mistake, there is a lot at stake here: 0.5% of a market that is over $100B a year is over $500M a year that HEVC Advance is going after.

Another interesting impact this could have on the industry is the potential for content owners and CE manufacturers to move away from HEVC and adopt Google’s competing VP9 codec, which requires no licensing. This might be hard for many who have already tied their services to hardware, but nothing is stopping them from re-encoding their library in VP9 for playback via the web and apps, and only using HEVC for playback on TVs and other hardware devices. HEVC Advance’s licensing terms definitely put VP9 in the spotlight, and many content owners are going to run the numbers, comparing what they would pay in HEVC royalties against the cost of re-encoding into VP9. The whole reason content owners are moving to HEVC is better quality with fewer bits, which means lower delivery costs. If those cost savings are now erased by new royalty payments for HEVC technology, what’s the point of using HEVC over VP9? This is a question many content owners are going to start asking themselves.
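
The "run the numbers" exercise described above can be sketched as a simple comparison: HEVC's delivery savings over VP9 versus the royalties owed, plus how long a one-time VP9 re-encode would take to pay for itself. Every input here is a hypothetical placeholder; real figures would come from a content owner's own CDN, storage and encoding costs, and the model deliberately ignores factors such as device support and quality differences between the codecs.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class CodecScenario:
    # All values are hypothetical, in dollars.
    hevc_annual_delivery_savings: float  # CDN/storage saved per year by HEVC vs. VP9
    hevc_annual_royalty: float           # royalties owed per year for using HEVC
    vp9_reencode_cost: float             # one-time cost to re-encode the library in VP9


def hevc_net_annual_advantage(s: CodecScenario) -> float:
    """Positive if HEVC still saves money after royalties; negative if not."""
    return s.hevc_annual_delivery_savings - s.hevc_annual_royalty


def vp9_payback_years(s: CodecScenario) -> Optional[float]:
    """Years for a VP9 re-encode to pay for itself, or None if HEVC stays cheaper."""
    yearly_gain = -hevc_net_annual_advantage(s)
    if yearly_gain <= 0:
        return None
    return s.vp9_reencode_cost / yearly_gain


if __name__ == "__main__":
    scenario = CodecScenario(
        hevc_annual_delivery_savings=20e6,
        hevc_annual_royalty=50e6,
        vp9_reencode_cost=15e6,
    )
    print("HEVC net annual advantage:", hevc_net_annual_advantage(scenario))
    print("VP9 re-encode payback (years):", vp9_payback_years(scenario))
```

In this made-up scenario the royalties outweigh HEVC's savings by $30M a year, so a $15M re-encode would pay for itself in about six months; with a small enough royalty, the function returns `None` and HEVC stays the cheaper option.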

The bottom line is that HEVC Advance is bad for the industry, for consumers and for the growth of 4K, and in my two calls with the company, it was clear that lawyers, not technology people, are driving the licensing. The company was under the impression that content owners deliver their own content, which most don’t, since they use third-party CDNs. So even if a content owner wanted to license patents from HEVC Advance, it doesn’t currently have the data needed to determine what it would pay. This means content owners would have to spend more money and time setting up a data collection process, on top of the cost of the license itself. HEVC Advance thinks content owners and distributors track what percentage of their content is consumed via different codecs, which isn’t accurate, and they were dumbfounded when I told them that. Almost all of their answers to my questions were that they would make it “easy” for companies and “work with them”, but of course they don’t understand the basics and don’t have the skills to even know what kind of help content owners would need.

HEVC Advance excels at being vague, speaking in lawyer terms, avoiding specifics, and showcasing their complete lack of understanding of the market they are going after. Frankly, it’s insulting that their press release from today talks about providing “efficient and transparent access to patents”, yet they have provided no details on any of the actual patents. This pool is completely incompetent and lacks any real understanding of the market.

This won’t be the last we hear about patents pertaining to HEVC and higher-quality video/audio, as Dolby has already told content owners to expect another royalty pertaining to HDR, in addition to HEVC. So don’t assume that HEVC and 4K are going to get the kind of traction that many are predicting. (Updated 7/23: Edited post to reference Google’s VP9 codec, not VP10)

Frost & Sullivan Analyst Avni Rambhia contributed to this post.

Note: I am available to talk to any content owner, vendor, member of the media or anyone else who wants details on all of this. This is bad for everyone, and I am making it my job to try to educate everyone as much as possible. I have already had multiple calls with lawyers and intellectual property groups at content firms, MSOs and others potentially impacted by this. All calls are off-the-record and confidential. I can be reached anytime at 917-523-4562 or mail@danrayburn.com

News site The Australian is reporting that Telstra plans to spin off Ooyala, the online video platform provider they purchased in August of last year, with an IPO on the NYSE or NASDAQ. I’ve been hearing these rumors for a few weeks now, and while it would be good to see another online video platform provider join Brightcove as a public company, Ooyala’s not setting realistic expectations about the size of the market they are in, or how quickly they can grow.

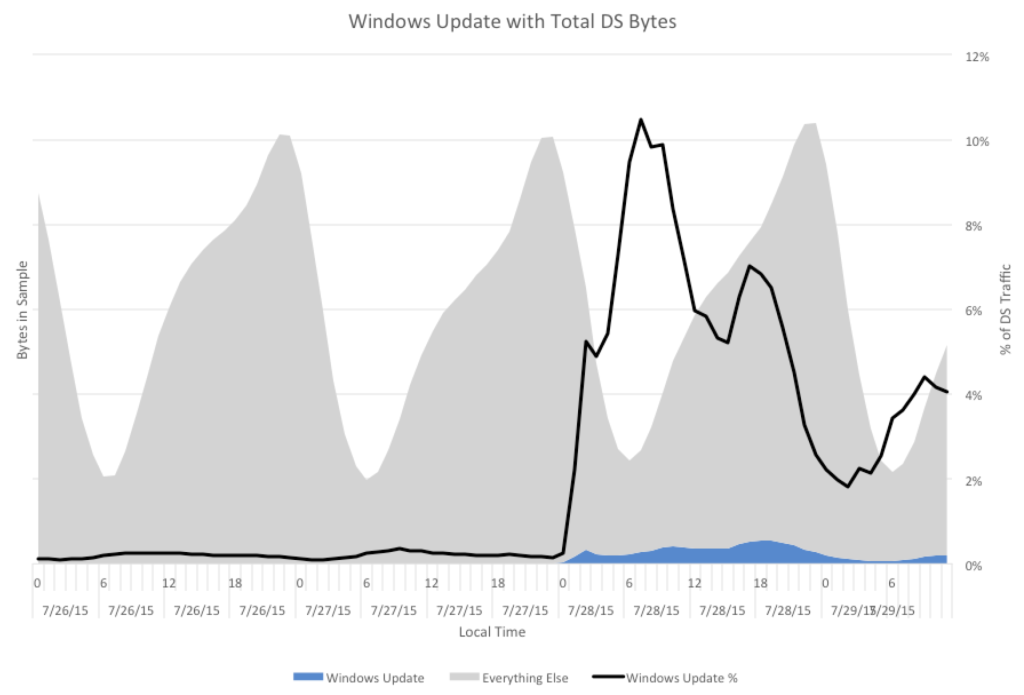

(Update: Tuesday July 28th: As of 1pm ET, the Windows 10 launch is already massive, with traffic over 10Tb/s.) I’ve never used the term “break the Internet” because most of the time when people say that, they are simply overhyping an event on the web. But with the volume of downloads Microsoft is expecting for the launch of Windows 10, and the capacity they have already reserved from third-party CDNs to deliver the software, the Internet is in for some real performance problems this week. Based on numbers I am hearing from multiple sources, Microsoft has reserved up to 40Tb/s of capacity across all of the third-party CDNs combined. To put that number in perspective, some of Apple’s largest recent live events on the web have peaked at 8Tb/s. Windows 10 is expected to be five times that and will easily be the largest day/week of traffic ever on the Internet. QoS problems are to be expected, especially since all of the CDNs will be rate-limiting their delivery of the 3GB download and many ISPs will max out interconnection capacity in certain cities.
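
For a sense of scale, here is a back-of-the-envelope sketch converting aggregate CDN throughput into completed 3GB downloads. It assumes decimal units (1Tb = 10^12 bits, 1GB = 10^9 bytes) and every bit of capacity being used, and it ignores protocol overhead and the rate limiting mentioned above, so treat the results as a theoretical ceiling rather than a traffic estimate. The function name is hypothetical.

```python
def downloads_per_hour(capacity_tbps: float, file_gb: float) -> float:
    """Upper bound on full downloads per hour at a given aggregate throughput.

    Assumes decimal units (1 Tb = 1e12 bits, 1 GB = 1e9 bytes),
    100% utilization and no protocol overhead.
    """
    bits_per_second = capacity_tbps * 1e12
    bits_per_file = file_gb * 1e9 * 8
    return bits_per_second / bits_per_file * 3600


if __name__ == "__main__":
    # Figures from the post: 40 Tb/s reserved, over 10 Tb/s observed at 1pm ET.
    for label, tbps in (("Reserved capacity", 40), ("Observed at 1pm ET", 10)):
        print(f"{label}: {tbps} Tb/s ~ {downloads_per_hour(tbps, 3):,.0f} downloads/hour")
    print(f"Reserved capacity vs. Apple's 8 Tb/s peak: {40 / 8:.0f}x")
```

Even as a ceiling, 40Tb/s works out to about 6 million complete 3GB downloads per hour.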
