Find Anomalies: It’s Time for CDNs to Use Machine Learning

CDNs play a vital role in how the web works. However, the volume, variety and velocity of the log files they generate can make issue detection and mitigation exceedingly difficult. To overcome this data challenge, you first need to understand your normal CDN patterns and then identify, in real time, activity that deviates from the norm. This is where machine learning can take CDN services to the next level.

The Challenges

CDN operators need to be notified immediately about sudden increases in bandwidth consumption at the PoPs or proxies in their network so that they can take corrective action. The identification process begins with understanding which sources (customers) caused the abnormal peaks in bandwidth consumption. Without the ability to quickly obtain insights from fresh data, you won’t be able to foresee issues and prevent them from escalating into full-blown headaches.
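The two steps described above, spotting a bandwidth peak and then attributing it to customers, can be sketched roughly as follows. This is a minimal illustration rather than any vendor's method: the function names, the median-based baseline and the `factor` threshold are all assumptions made for the example.

```python
from statistics import median


def is_bandwidth_spike(history_mbps, current_mbps, factor=1.5):
    """Flag a spike when current bandwidth exceeds `factor` times the
    median of recent samples (hypothetical rule, chosen for simplicity)."""
    if not history_mbps:
        return False  # no baseline yet, so nothing to compare against
    return current_mbps > factor * median(history_mbps)


def top_contributors(baseline_by_customer, current_by_customer, n=3):
    """Rank customers by how much their bandwidth grew over their baseline."""
    deltas = {
        customer: current - baseline_by_customer.get(customer, 0)
        for customer, current in current_by_customer.items()
    }
    ranked = sorted(deltas.items(), key=lambda item: item[1], reverse=True)
    # Keep only customers whose consumption actually increased.
    return [(customer, delta) for customer, delta in ranked[:n] if delta > 0]
```

In practice the baseline would likely need to account for daily and weekly seasonality, which a fixed median does not capture.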

Another challenge arises from CDN system upgrades. It is best to deploy upgrades gradually, running A/B tests on specific segments of proxies, in order to reduce the risk of widespread errors. Yet CDN providers still lack the real-time visibility needed to catch basic issues such as an increase in HTTP errors, IO access or cache churn rates. This gap results in delayed upgrade releases, which in turn slows CDN providers’ pace of innovation.
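As a rough illustration of what such an A/B check could look like, the sketch below compares the HTTP error rate of the upgraded proxies against the rest using a standard two-proportion z-test. The function names and the `z_threshold` default are hypothetical, and a real rollout gate would probably look at more signals than error counts alone.

```python
import math


def error_rate_z(control_errors, control_requests, upgraded_errors, upgraded_requests):
    """Two-proportion z-score: how far the upgraded group's error rate
    sits above the control group's, in standard errors."""
    p_control = control_errors / control_requests
    p_upgraded = upgraded_errors / upgraded_requests
    pooled = (control_errors + upgraded_errors) / (control_requests + upgraded_requests)
    std_err = math.sqrt(
        pooled * (1 - pooled) * (1 / control_requests + 1 / upgraded_requests)
    )
    if std_err == 0:
        return 0.0  # no errors (or only errors) in both groups
    return (p_upgraded - p_control) / std_err


def should_halt_rollout(control_errors, control_requests,
                        upgraded_errors, upgraded_requests, z_threshold=3.0):
    """Suggest halting when the upgraded proxies err significantly more often."""
    z = error_rate_z(control_errors, control_requests,
                     upgraded_errors, upgraded_requests)
    return z > z_threshold
```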

I recently had an interesting discussion about these challenges with David Drai, Founder of Anodot. In the past, Drai co-founded Cotendo, a system that optimizes CDN consumption. In 2012, Cotendo was acquired by Akamai and Drai became the CTO of EMEA at Akamai. David said one of the main cases he remembers revolved around a version release. “We had this bug that we didn’t uncover during an A/B test of the version and we released it to all network proxies. As the person in charge, it was a complete disaster.”

Log Analytics Is Just Not Good Enough

To cope with these challenges, CDN providers rely on log analytics systems that gather and record billions of transaction logs from the relevant proxies. In most cases, these tools are built in-house and are used to run queries and retrieve insights about network performance. But that doesn’t suffice. Some are legacy systems that don’t scale, and they are typically not intelligent enough to provide results automatically in real time. As a result, a report may be based on relatively old data, which in turn leads to delayed or outdated responses.

“Say a CDN operator wants to get information about a specific customer’s RPS consumption rate per proxy and per PoP for a whole month. To obtain this information, the log management solution needs to scan billions of customer logs and extract the desired customer’s transactions, which can take days,” Drai explained. Another challenge CDN providers face is visibility into the operator’s network performance. CDN providers use tools such as Keynote, Gomez and Catchpoint, which measure network latency, so that they can switch to other providers if and when the need arises. However, although these solutions provide insights in real time, it is still a challenge to correlate current issues with an operator’s performance.
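To make the query in Drai's example concrete, here is a small sketch of the aggregation it implies: filter one customer's transactions and compute requests per second for each PoP and proxy. The record fields (`customer`, `pop`, `proxy`) and the function name are assumptions for illustration; real CDN log schemas differ. Note that it still scans every record, which is exactly why the operation is slow at the scale of billions of logs.

```python
from collections import Counter


def requests_per_second(records, customer, window_seconds):
    """Average RPS per (PoP, proxy) for one customer over a time window.

    `records` is any iterable of dicts with hypothetical keys
    'customer', 'pop' and 'proxy', one dict per logged request.
    """
    counts = Counter()
    for record in records:  # full scan: cost grows with total log volume
        if record["customer"] == customer:
            counts[(record["pop"], record["proxy"])] += 1
    return {key: hits / window_seconds for key, hits in counts.items()}
```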

David says that “when dealing with CDN issues, time is of the essence. The user download rate of one of our gaming customers at Cotendo decreased by 10-15% due to an issue that took us almost a week to detect. In the world of CDN, that kind of delay can significantly damage the CDN provider’s reputation.” Last but not least, one of the main problems with most traditional analytics systems, as well as modern log management tools, is their reliance on manual, preconfigured dashboards, reports and alerts. In the dynamic world of CDN, there is no limit to the new issues that can arise and the information that can be extracted from the vast amount of available data, so a fixed set of views will always miss something.

We Need a Different Approach

What if we could predict a bottleneck in an internet router based not solely on simple BGP rules, but on true science? Over the last decade, data analytics technologies have evolved from complex and cumbersome solutions to modern and flexible big data solutions such as Hadoop. Over the last few years, these big data technologies, including Cassandra and MongoDB, have gained the industry’s trust and have become an important component in every IT environment. The next step involves incorporating new analytics solutions that allow you to run queries on top of these data engines. Think of it as Google Analytics for CDN providers.

However, for all of the great advancements being made in the realm of big data, the existing monitoring tools aren’t enough. As noted above, current monitoring tools depend on human analysts who define and create flat reports and dashboards. Even with many different reports, experience with CDN patterns has shown that you can’t cover every case and be notified in real time as abnormal behavior develops. It is simply not feasible when you are dealing with tens of thousands of different data points in multiple dimensions.

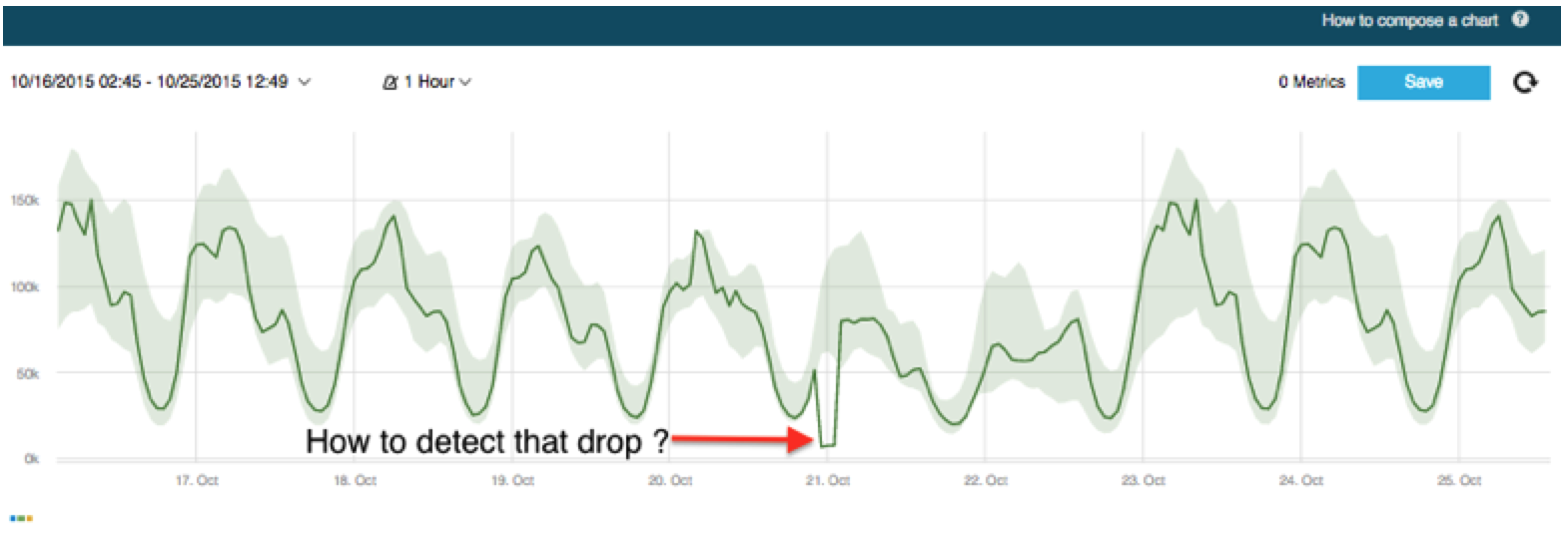

The next step in the world of analytics is machine learning. The ultimate solution is to automate data-based learning, then develop insights and make relevant predictions. This new discipline involves running pattern recognition algorithms and predictive analytics. And while it may seem far-fetched, Drai and his team at Anodot are already working on it. They are aiming to solve the CDN challenges outlined above as well as additional use cases that predictive analytics solutions can help with. David says Anodot’s algorithms learn and continuously define normal CDN behavior, and can therefore send out alerts about anomalies and automatically correlate different data points. For example, the system will alert only if there is an increase in the number of HTTP errors across several proxies. “The key is zero human configurations.”
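The article does not describe Anodot's algorithms, so the following is only a toy stand-in for the general idea: learn a per-proxy baseline from history, flag proxies whose HTTP error count departs from it, and alert only when several proxies are flagged at once. The z-score rule, the `min_proxies` parameter and all names are assumptions made for this sketch.

```python
from statistics import mean, stdev


def anomalous_proxies(error_history, current_errors, z_threshold=3.0):
    """Return proxies whose current error count is far above their own baseline.

    `error_history` maps proxy name -> list of past error counts;
    `current_errors` maps proxy name -> latest error count.
    """
    flagged = []
    for proxy, history in error_history.items():
        if len(history) < 2:
            continue  # not enough data to estimate a baseline
        baseline = mean(history)
        # Floor the spread at 1.0 so near-constant histories do not
        # turn a one-error increase into an alert.
        spread = max(stdev(history), 1.0)
        value = current_errors.get(proxy, 0)
        if (value - baseline) / spread > z_threshold:
            flagged.append(proxy)
    return sorted(flagged)


def should_alert(error_history, current_errors, min_proxies=2, z_threshold=3.0):
    """Alert only when the anomaly shows up on several proxies together."""
    flagged = anomalous_proxies(error_history, current_errors, z_threshold)
    return len(flagged) >= min_proxies
```

Requiring agreement across proxies is one simple way to suppress alerts caused by a single noisy machine. A production system would learn thresholds and seasonality from the data rather than take them as parameters.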

Final Note

In a previous article, I wrote about Apple’s multi-CDN strategy. Think about a world where advanced analytics systems predict bottlenecks and automatically route traffic to the most appropriate CDN and the optimal proxy. Predictive analytics can be a great solution to the challenges that CDN providers have faced for years. It seems that machine learning solutions such as Anodot will be able to take this industry to a new level, creating new development opportunities in a world that has long suffered from complexity and a lack of visibility.